Sony's new line of SLT digital cameras packs on the megapixels. But it might be the mirrors that set the DSLRs apart from the pack. The cameras use translucent mirror technology and electronic viewfinders. "We do not expect Canon or Nikon to adopt these any time soon, as they're more conservative in this aspect of product design," said IDC's Chris Chute.

Sony (NYSE: SNE) has announced two new additions to its SLT-A family of cameras: the A77 and A65.

They offer 24.3MP effective resolution and have what Sony says is the world's first XGA OLED electronic viewfinder.

Both cameras use the translucent mirror technology common to Sony's SLT-A family and offer progressive full HD video recording.

"No other DSLR offers full HD recording in 60p, 50p, 25p, and 24p," digital photographer and film director Preston Kanak told TechNewsWorld.

Sony is unique in using translucent mirrors, remarked Chris Chute, a research manager at IDC.

"We do not expect Canon (NYSE: CAJ) or Nikon to adopt these any time soon, as they're more conservative in this aspect of product design," Chute told TechNewsWorld.

Sony did not respond to requests for comment by press time.

Tech Specs for the SLT-A Family Additions

The SLT-A77 can capture full-resolution images in bursts at 12 frames per second with full-time phase-detection autofocus. The A65 manages 10 frames per second.
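To put those burst rates in perspective, the following is a minimal sketch of the pixel throughput they imply. It uses only the figures quoted in this article (24.3MP effective resolution, 12 and 10 frames per second); the function name is illustrative, and the result says nothing about file sizes or buffer depth, which Sony has not detailed here.

```python
# Figures quoted in the article.
EFFECTIVE_MEGAPIXELS = 24.3
BURST_FPS = {"A77": 12, "A65": 10}


def burst_throughput_mp_per_s(megapixels: float, fps: int) -> float:
    """Megapixels captured per second during a full-resolution burst."""
    return megapixels * fps


for model, fps in BURST_FPS.items():
    rate = burst_throughput_mp_per_s(EFFECTIVE_MEGAPIXELS, fps)
    print(f"{model}: {rate:.1f} MP/s")
```

By this arithmetic the A77 moves roughly 292 megapixels per second in a burst and the A65 roughly 243.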

Both cameras use cross sensors with multipoint autofocus systems for precision tracking. The A77 has a 19-point autofocus system with 11 cross sensors, and the A65 has a 15-point autofocus system with three cross sensors.

This lets cameras maintain their focus lock on a designated moving object even if another object blocks it temporarily from view.
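The idea of holding a lock through a brief obstruction can be illustrated with a toy sketch. This is not Sony's algorithm, which the company has not described here; the class name, the `max_missed_frames` tolerance and the sample coordinates are all hypothetical. The sketch simply keeps the last known subject position for a few frames when the subject is not detected, then gives up.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

Point = Tuple[float, float]


@dataclass
class FocusLock:
    """Toy model of a focus lock that survives short occlusions (hypothetical)."""

    max_missed_frames: int = 5  # assumed tolerance, not a Sony specification
    position: Optional[Point] = None
    missed: int = 0

    def update(self, detection: Optional[Point]) -> Optional[Point]:
        """Feed one frame; `detection` is None when the subject is hidden."""
        if detection is not None:
            # Subject visible: refresh the lock and reset the miss counter.
            self.position = detection
            self.missed = 0
        elif self.position is not None:
            # Subject hidden: hold the last position until the tolerance runs out.
            self.missed += 1
            if self.missed > self.max_missed_frames:
                self.position = None
                self.missed = 0
        return self.position


lock = FocusLock(max_missed_frames=2)
frames = [(10.0, 5.0), None, None, (12.0, 5.5), None, None, None]
history = [lock.update(d) for d in frames]
print(history)
```

In this run the lock survives the two-frame gap and is dropped only after the subject has been missing for three frames in a row.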

"It's not so much the sensors, but the logic that connects them," Rob Enderle, principal analyst at the Enderle Group, told TechNewsWorld.

"The result is supposed to be better focus on complex subjects with subject material dispersed both front and back, and side to side, and the resolution's very high, which should allow for better editing," Enderle elaborated.

The new cameras use Sony's newly developed Exmor APS HD CMOS sensor, which gives them that effective resolution of 24.3MP.

Exmor sensors combine the speed of CMOS sensors with advanced-quality image sensor technologies to provide enhanced resolution for more detailed images.

"With massive improvements in editing tools, the quantity of good data is more important than the quality of any one shot," Enderle said.

The Exmor sensors are teamed with the latest version of Sony's BIONZ image processing engine. This speeds up the conversion of raw image data from the Exmor sensors into the format stored on the camera's memory card.

Sony uses the BIONZ engine in several of its cameras, including those in the DSC family.

Both cameras use what Sony says is the world's first XGA OLED Tru-Finder. This electronic viewfinder has an XGA resolution of about 2.36 million dots and offers a high-contrast image with 100 percent frame coverage, Sony claims.
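The dot count of an XGA viewfinder can be sanity-checked with simple arithmetic. The sketch below assumes the common spec-sheet convention of counting each red, green and blue sub-pixel as a separate dot; that convention is an assumption here, not something stated in Sony's announcement.

```python
XGA_WIDTH, XGA_HEIGHT = 1024, 768
SUBPIXELS_PER_PIXEL = 3  # assumption: one red, one green and one blue dot per pixel


def dot_count(width: int, height: int, subpixels: int = SUBPIXELS_PER_PIXEL) -> int:
    """Total addressable dots for a panel of the given pixel dimensions."""
    return width * height * subpixels


dots = dot_count(XGA_WIDTH, XGA_HEIGHT)
print(f"{dots:,} dots (~{dots / 1e6:.2f} million)")
```

Under that assumption an XGA panel works out to 2,359,296 dots, or about 2.36 million.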

The cameras have a Smart Teleconverter feature that lets users compose shots on the Tru-Finder, without looking away from the viewfinder, and capture them as 12MP images.
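The article does not say how the 12MP images are produced. Assuming they come from a center crop of the 24.3MP frame at the same aspect ratio (an assumption, not a confirmed detail), the implied magnification follows from the ratio of pixel counts, as this sketch shows.

```python
import math


def linear_crop_factor(full_megapixels: float, cropped_megapixels: float) -> float:
    """Linear magnification implied by cropping to a smaller pixel count.

    Pixel count scales with area, so the linear factor is the square root
    of the area ratio (assumes the crop keeps the original aspect ratio).
    """
    return math.sqrt(full_megapixels / cropped_megapixels)


factor = linear_crop_factor(24.3, 12.0)
print(f"Implied crop factor: about {factor:.2f}x")
```

On these assumptions, cropping 24.3MP down to 12MP corresponds to a magnification of a little over 1.4x.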

"One thing that's great about the technology is the new OLED," Kanak said. "We couldn't use the viewfinder to see our compositions with previous technology."

The A77 has a three-way adjustable screen that Sony claims is another world's first.


Mirror, Mirror on the Wall

The latest developments in Sony's translucent mirror technology make the A77 and A65 the quickest and most responsive interchangeable lens cameras in their class, the company claims.

"This is a brilliant move by Sony to use translucent mirror technology this way, providing weight and speed advantages to its cameras," Enderle said.

Translucent mirror technology replaces the optical pentaprisms used in DSLRs with electronic viewfinders, making digital cameras smaller and lighter. Introduced in the 1960s, it was used in specialized high-speed cameras such as the Canon Pellix QL, but it was too expensive for mainstream use, Enderle stated.

Competitors to the A77 and A65 are the Panasonic Lumix and the Canon 60D and 7D, Kanak said.

The question now is whether consumers will bite.

"With the A77, Sony is now offering cameras that compete quite effectively against cameras such as the Canon 7D," Carl Howe, director of anywhere consumer research at the Yankee Group, told TechNewsWorld.

"Its only challenge is convincing high-end buyers to switch brands," Howe added.

A report this week that Sprint will soon offer the Apple iPhone on its network has excited the carrier's investors -- Sprint's stock rose about 10 percent on the news. Offering the phone would allow the carrier to better compete with its rivals' device portfolios; however, questions have arisen regarding network strain and how Sprint will handle factors like the phone's high subsidy cost.

A report this week that Sprint will soon offer the Apple iPhone on its network has excited the carrier's investors -- Sprint's stock rose about 10 percent on the news. Offering the phone would allow the carrier to better compete with its rivals' device portfolios; however, questions have arisen regarding network strain and how Sprint will handle factors like the phone's high subsidy cost. The website reform plan is part of a larger federal program to improve customer relations with the public through various means, including the use of innovative technologies. "The federal government has a responsibility to streamline and make more efficient its service delivery to better serve the public," President Barack Obama said in kicking off the customer service initiative earlier this year.

The website reform plan is part of a larger federal program to improve customer relations with the public through various means, including the use of innovative technologies. "The federal government has a responsibility to streamline and make more efficient its service delivery to better serve the public," President Barack Obama said in kicking off the customer service initiative earlier this year. Facebook has given its users a new set of privacy controls, allowing them greater powers to select who sees what and approve tagged posts. The new features appear somewhat similar to those found in Google+, the social network that could prove to become a major Facebook rival. Facebook's changes have received a nod of approval from some privacy advocates.

Facebook has given its users a new set of privacy controls, allowing them greater powers to select who sees what and approve tagged posts. The new features appear somewhat similar to those found in Google+, the social network that could prove to become a major Facebook rival. Facebook's changes have received a nod of approval from some privacy advocates. The big problem with Linux users is their aversion to paying for anything, said tech analyst Rob Enderle -- so for Humble Bumble's developers to get customers to voluntarily pay for Linux games is in itself pretty amazing. ... The Linux derivative OS, Android, might well be the platform for change when it comes to Linux gamers parting with their cash.

The big problem with Linux users is their aversion to paying for anything, said tech analyst Rob Enderle -- so for Humble Bumble's developers to get customers to voluntarily pay for Linux games is in itself pretty amazing. ... The Linux derivative OS, Android, might well be the platform for change when it comes to Linux gamers parting with their cash. As Apple fanatics wait with bated breath for an iPhone 5 announcement, vague reports of a completely new Apple product line bubbled to the surface. Elsewhere, Apple asked iOS developers to give users a little more privacy, and the iPhone may take on yet another U.S. wireless carrier soon.

As Apple fanatics wait with bated breath for an iPhone 5 announcement, vague reports of a completely new Apple product line bubbled to the surface. Elsewhere, Apple asked iOS developers to give users a little more privacy, and the iPhone may take on yet another U.S. wireless carrier soon. "First, back up! It should be off-site, whether in the cloud or in a removable disk that you take to your grandmother's house. It doesn't have to be daily, either -- for most home users, a backup from last month is good enough. Failing that, though, if something happens, you'll lose a part of your life."

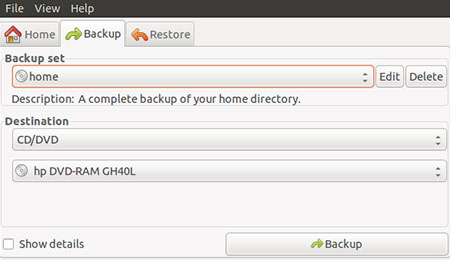

"First, back up! It should be off-site, whether in the cloud or in a removable disk that you take to your grandmother's house. It doesn't have to be daily, either -- for most home users, a backup from last month is good enough. Failing that, though, if something happens, you'll lose a part of your life." Not having a backup strategy for your computers is much like not having anti-intrusion protection. For any solution to work well, you must have a product that provides what you need. Then you must actually use the product regularly. That is what I like about pybackpack. I set it to update my backup file set at specific time intervals. Then if I should need to restore my system files, I click the restore button, and it is done.

Not having a backup strategy for your computers is much like not having anti-intrusion protection. For any solution to work well, you must have a product that provides what you need. Then you must actually use the product regularly. That is what I like about pybackpack. I set it to update my backup file set at specific time intervals. Then if I should need to restore my system files, I click the restore button, and it is done.

Just because a technology has become commoditized doesn't mean that it has lost its vital edge or potential for innovation. What really seems to be IBM's (and now HP's) focus on life after PCs is an emphasis on developing and delivering the IT infrastructure beneath consumer and business computing.

Just because a technology has become commoditized doesn't mean that it has lost its vital edge or potential for innovation. What really seems to be IBM's (and now HP's) focus on life after PCs is an emphasis on developing and delivering the IT infrastructure beneath consumer and business computing.